Under the current scenario of global biodiversity loss, there is an urgent need for more precise and informed environmental management1. In this context, data derived from animal monitoring plays a crucial role in informing environmental managers for species conservation2,3. Animal surveys provide important data on population sizes, distribution and trends over time, which are essential to assess the state of ecosystems and identify species at risk4,5. By using systematic monitoring data on animal and bird populations, scientists can detect early warning signs of environmental changes, such as habitat loss, climate change impacts, and pollution effects6,7,8. This information helps environmental managers develop targeted conservation strategies, prioritize resource allocation, and implement timely interventions to protect vulnerable species and their habitats2,9,10. Furthermore, bird surveys often serve as indicators of local ecological conditions, given birds’ sensitivity to environmental changes, making them invaluable in the broader context of biodiversity conservation and ecosystem management11. However, monitoring birds, like any other animal, is highly resource-intensive. Automated monitoring systems that reduce the investment required to obtain accurate population data are therefore much needed.

The first step in creating algorithms that detect species automatically is to build datasets with information on species traits to train those algorithms. For example, a common way to classify species is by their vocalizations12. For this reason, organizations such as the Xeno-Canto Foundation (https://xeno-canto.org) compiled a large-scale online database13 of bird sounds with more than 200,000 recordings covering 10,000 species worldwide. This dataset was crowdsourced and is still growing today. The large amount of data it provides has facilitated the organization of challenges to create bird-detection algorithms using acoustic data in understudied areas, such as those led by the Cornell Lab (https://www.birds.cornell.edu). This is the case of BirdCLEF202314 and BirdCLEF202415, which used acoustic recordings of eastern African and Indian birds, respectively. While these datasets contain many short recordings from a wide variety of birds, other authors have released datasets composed of fewer but longer recordings, which imitate a real wildlife scenario. Examples are NIPS4BPlus16, which contains 687 recordings totaling 30 hours, and BirdVox-full-night17, which has 6 recordings of 10 hours each.

Although audio is a common way to classify bird species and the field of bioacoustics has grown tremendously in recent years, another possible approach to identifying species automatically is to use images18. One such bird image dataset is Birds52519, which offers a collection of almost 90,000 images of 525 different bird species. Another standard image dataset is CUB-200-201120, which provides 11,788 images from 200 different bird species. This dataset provides not only the bird species, but also bounding boxes and part locations for each image. There are also datasets aimed at specific world regions, such as NABirds21, which includes almost 50,000 images of the 400 most common birds seen in North America. This dataset provides a fine-grained classification of species, as its annotations differentiate between male, female and juvenile birds. These datasets can be used to create algorithms for the automatic detection of species based on image data.

However, another important source of animal ecology information is video, which has been much less studied because of the technological challenges it poses. Video recordings may offer information not only about which species are present in a specific place, but also about their behavior. Information about animal behavior may be very relevant for understanding individual and population responses to anthropogenic impacts, and has therefore been linked to conservation biology and restoration success22,23,24,25. Despite this potential for animal monitoring and conservation, databases on wildlife behavior are scarce. For example, the VB100 dataset26 comprises 1416 clips of approximately 30 seconds each and covers 100 different North American bird species. The only dataset in the literature that comprises videos annotated with bird behavior is the Animal Kingdom dataset27, which is not specifically aimed at birds and contains annotated videos of multiple animals. Specifically, it contains 30,000 video sequences of multi-label behaviors involving 6 different animal classes; however, the number of bird videos was not specified by the authors. Table 1 summarizes the main information of the datasets reviewed.

Due to the scarcity of datasets of bird videos annotated with behaviors, this study proposes the development of the first fine-grained behavior detection dataset for birds. Unlike Animal Kingdom, where each video is associated with the multiple behaviors happening in it, our dataset provides spatio-temporal behavior annotations. This means that videos are annotated per frame: the behavior being performed and its location (i.e., a bounding box) are annotated in each frame. Moreover, the species of each bird appearing in the video is also provided. The proposed dataset is composed of 178 videos recorded in Spanish wetlands, more specifically in the region of Alicante (southeastern Spain). The 178 videos expand to 858 behavior clips involving 13 different bird species. The average duration of each of the behavior clips is 19.84 seconds and the total duration of the dataset recorded is 58 minutes and 53 seconds. The annotation process involved several steps of data curation, with a technical team working alongside a group of professional ecologists. In comparison to other bird video datasets, ours is the first to offer annotations for species, behaviors, and localization. Furthermore, Visual WetlandBirds is the first dataset to provide frame-level annotations. A feature comparison of bird video datasets is presented in Table 2.
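To make the per-frame annotation scheme described above more concrete, the following is a minimal sketch of how one annotated bird in one frame could be represented. The class name, field names, bounding-box convention and the placeholder values are assumptions made for illustration; they are not the schema of the released files.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FrameAnnotation:
    """One annotated bird in one video frame (hypothetical schema)."""
    video_id: str
    frame_index: int
    bird_id: int      # distinguishes individuals within the same video
    species: str
    behavior: str
    bbox: Tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max) in pixels


def annotations_for_frame(
    annotations: List[FrameAnnotation], video_id: str, frame_index: int
) -> List[FrameAnnotation]:
    """Return every bird annotated in a given frame of a given video."""
    return [
        a for a in annotations
        if a.video_id == video_id and a.frame_index == frame_index
    ]


# Placeholder data: two individuals visible in frame 0, one in frame 1.
example = [
    FrameAnnotation("video_001", 0, 0, "Species A", "Resting", (10.0, 20.0, 110.0, 140.0)),
    FrameAnnotation("video_001", 0, 1, "Species A", "Swimming", (200.0, 60.0, 320.0, 150.0)),
    FrameAnnotation("video_001", 1, 0, "Species A", "Alert", (12.0, 21.0, 112.0, 141.0)),
]

birds_in_first_frame = annotations_for_frame(example, "video_001", 0)
```

Storing one record per bird per frame keeps species, behavior and location together, which is what allows several individuals with different behaviors to coexist in the same frame.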

Table 3 lists the species included in the dataset, with their common and scientific names. The number of videos and minutes recorded for each species is also included.

Seven main behaviors were identified as key activities recorded in our dataset. These represent the main activities performed by waterbirds in nature28. Table 4 lists these behaviors together with the number of clips recorded for each and the mean duration of each behavior in frames. A clip is a piece of video in which a bird is performing a specific behavior.

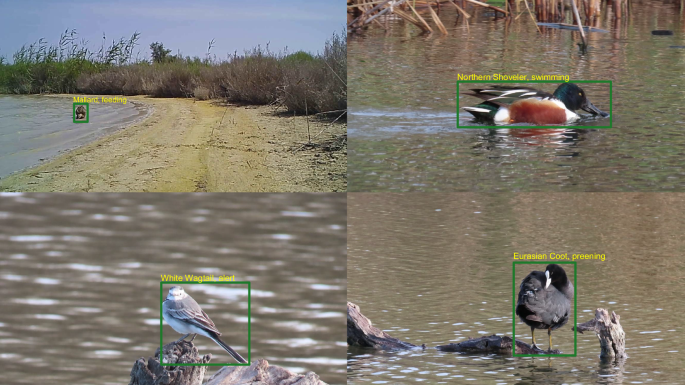

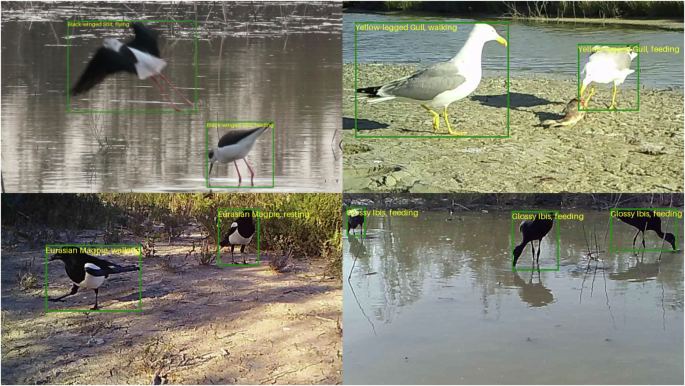

Figure 1 presents sample frames in which only a single bird can be distinguished. However, the dataset contains not only videos with a single individual, but also videos in which several birds appear together. This is the case for gregarious species, which are species that gather in an area to carry out different activities. Although the individuals of gregarious species often share the same behavior at the same time, it is also common for several behaviors to be seen in the same video at the same time. Figure 2 shows some sample frames where this happens. To obtain the statistics shown in Table 4, videos involving different birds and/or birds performing different activities sequentially were cut into clips in which a single individual performs a single behavior.
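The cutting of videos into clips described above can be sketched as follows. This is only an illustration under stated assumptions: per-frame labels are taken to be `(frame_index, bird_id, behavior)` tuples, and a clip is taken to be a maximal run of consecutive frames in which one individual keeps the same behavior. The names `Clip` and `split_into_clips` are hypothetical and do not come from the dataset tooling.

```python
from collections import defaultdict
from typing import Iterable, List, NamedTuple, Tuple


class Clip(NamedTuple):
    bird_id: int
    behavior: str
    start_frame: int
    end_frame: int  # inclusive


def split_into_clips(frame_labels: Iterable[Tuple[int, int, str]]) -> List[Clip]:
    """Group per-frame labels into clips of one individual and one behavior."""
    per_bird = defaultdict(list)
    for frame_index, bird_id, behavior in frame_labels:
        per_bird[bird_id].append((frame_index, behavior))

    clips: List[Clip] = []
    for bird_id, entries in per_bird.items():
        entries.sort()
        start_frame, current = entries[0]
        prev_frame = start_frame
        for frame_index, behavior in entries[1:]:
            # Close the clip when the behavior changes or frames stop being consecutive.
            if behavior != current or frame_index != prev_frame + 1:
                clips.append(Clip(bird_id, current, start_frame, prev_frame))
                start_frame, current = frame_index, behavior
            prev_frame = frame_index
        clips.append(Clip(bird_id, current, start_frame, prev_frame))

    return sorted(clips, key=lambda c: (c.start_frame, c.bird_id))


# Bird 0 rests and then becomes alert while bird 1 swims throughout.
labels = (
    [(f, 0, "Resting") for f in range(0, 3)]
    + [(f, 0, "Alert") for f in range(3, 5)]
    + [(f, 1, "Swimming") for f in range(0, 5)]
)
clips = split_into_clips(labels)
```

In this example a single five-frame video yields three clips, two for the first bird and one for the second, which illustrates how a video can contribute several clips to the counts.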

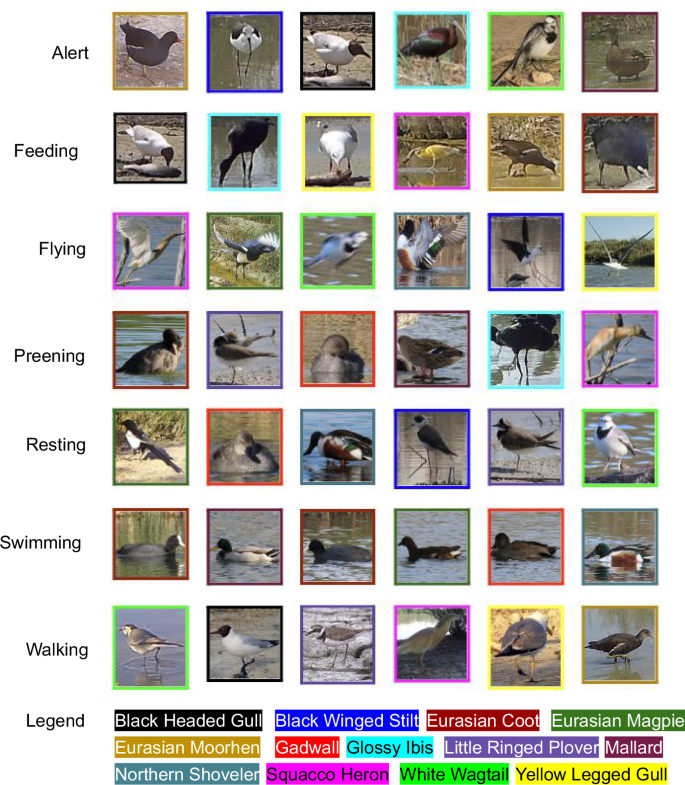

Among the seven behaviors proposed, the difference between the Alert, Preening and Resting behaviors should be highlighted. These distinctions were established by the ecology team. We considered that the bird was Resting when it was standing without making any movement. The bird was performing the Alert behavior when it was moving its head from side to side, looking around for possible dangers. Finally, we considered that the bird was Preening when it was standing and cleaning its body feathers with its beak. The remaining behaviors are self-explanatory.

As can be seen in Table 4, the number of clips per behavior is unbalanced across classes. This is because some behaviors, such as Flying or Preening, are performed less frequently and are therefore harder to record; these are the activities with the lowest number of clips in the dataset. Collecting more data on these less common behaviors would require more hardware and human resources (i.e., cameras and professional ecologists) to cover a wider area of the wetlands. Alternatively, techniques such as data augmentation29 can generate synthetic data from the real recordings. Allocating more human and hardware resources would ensure that the quality of the new data remains high, but it is costly, as high-quality cameras and additional ecology professionals are expensive. In contrast, synthetic generation techniques are widely used in current research because they provide an inexpensive way of increasing the amount of data available for training. Although inexpensive, synthetic data can decrease the quality of the dataset, so a trade-off between real and synthetic data would be necessary to limit the cost without compromising the quality of the videos. Despite the unbalanced nature of the behaviors, no balancing technique was applied to the released dataset, in order to maximize the number of different environments captured and thus preserve the variability of contexts in which the birds are recorded.
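As a minimal illustration of the kind of augmentation mentioned above, the sketch below mirrors a frame horizontally and updates its bounding box accordingly. It is not part of the released dataset, to which no augmentation or balancing was applied. The function name, the nested-list frame representation and the `(x_min, y_min, x_max, y_max)` pixel-edge convention are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

BBox = Tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max)


def hflip_frame_and_bbox(
    frame: Sequence[Sequence[int]], bbox: BBox
) -> Tuple[List[List[int]], BBox]:
    """Mirror a frame left-to-right and move its bounding box to match.

    `frame` is a list of pixel rows; the behavior label is unaffected by
    the flip, so only the image and the box need to change.
    """
    width = len(frame[0])
    flipped = [list(reversed(row)) for row in frame]
    x_min, y_min, x_max, y_max = bbox
    # The left edge becomes the right edge and vice versa.
    return flipped, (width - x_max, y_min, width - x_min, y_max)


# Tiny 2x3 "frame" with a box covering its leftmost column.
frame = [[1, 2, 3],
         [4, 5, 6]]
flipped_frame, flipped_bbox = hflip_frame_and_bbox(frame, (0.0, 0.0, 1.0, 2.0))
```

Applying the same flip twice returns the original frame and box, which is a convenient sanity check for this type of geometric augmentation.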

Table 4 also shows the mean duration of the clips per behavior. It is worth noting the difference in the number of frames between Flying, which has the minimum mean duration with 61 frames, and Swimming, which has the maximum with 257 frames. This difference is explained by the nature of the behaviors: swimming is a slow behavior that can be performed for a long time over the same area, whereas flying is a fast behavior and the bird quickly leaves the camera's field of view, especially in videos obtained by camera traps, which cannot follow the bird while it is moving.

To collect the videos, we deployed a set of camera traps and high-quality cameras in the Alicante wetlands. The camera traps automatically recorded videos when motion was detected in the environment. We complemented the camera trap videos with recordings from high-quality cameras. In these videos, a human operator controls the focus of the camera, obtaining better views and perspectives of the birds being recorded. The species recorded, the behaviors identified and the camera deployment areas were determined by professional ecologists based on their expertise. Fig. 3 shows cropped video frames in which all the bird species can be seen performing the different behaviors available in the dataset.

After data collection, the videos were annotated using a semi-automatic method composed of an annotation tool and a deep learning model. After annotation, a cross-validation was conducted to ensure annotation quality. This method is explained in detail in the next section.

To test the dataset for species and behavior identification, two baseline experiments were carried out: one for the bird classification task, which involves classifying the species and correctly localizing the bird given input frames, and a second one for the behavior detection task, which involves correctly classifying the behavior being performed by one bird during a set of frames.
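This section does not state how correct localization is scored. One widely used measure for comparing a predicted bounding box with an annotated one is intersection over union (IoU), sketched below as an illustration only. The function name and the `(x_min, y_min, x_max, y_max)` box convention are assumptions, and the sketch should not be read as the metric used in the baseline experiments.

```python
from typing import Tuple

BBox = Tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max)


def iou(box_a: BBox, box_b: BBox) -> float:
    """Intersection over union of two axis-aligned boxes, in [0, 1]."""
    # Overlapping rectangle; width and height are clamped at zero when disjoint.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two 2x2 boxes that overlap in a 1x1 square: IoU = 1 / (4 + 4 - 1).
overlap_score = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

A prediction is then typically counted as correctly localized when its IoU with the annotated box exceeds a chosen threshold; the threshold itself is a design choice that would have to be reported alongside any results.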